The essay

Nick Grandage on the place of the universe in the scheme of things | Issue 20 | 2022

Artificial intelligence can mimic the human capacity for deep learning, within a limited—narrow—set of circumstances. The human brain is a general processor with no such limitations. Without being aware of it, we are all engaging in deep learning through every moment and experience in life.

What can we learn from that to apply to the creation of artificial general intelligence? And how does the brain’s ability to rewire itself help us to survive?

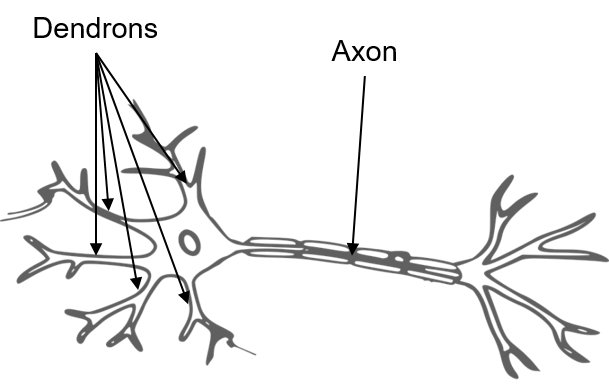

The human brain is the very model of power and efficiency. It can be said to rival the computing power of room-sized supercomputers while using one-third of the power of a 60 watt lightbulb. These capabilities come from the assembly of tens of billions of cells, called neurons, into a vastly interconnected network. Neurons have numerous branch-like structures called dendrites (from the Greek dendron, meaning tree) that receive chemical signals from other neurons. These signals are converted into an electrical impulse that travels through the body of the neuron and down a single thread-like structure called an axon. When the impulse reaches the terminus of the axon, it causes the release of neurotransmitters that diffuse across the gap (the synapse) between the axon and the dendrites of neighbouring neurons, propagating the signal.

The brain changes according to the information it processes, becoming more adept over time at performing the same task: practice makes perfect. In 1949, the Canadian psychologist Donald Hebb proposed the physiological basis for this phenomenon: when the axon of one neuron repeatedly takes part in firing a neighbouring neuron, a change occurs in one or both neurons that makes the connection between them stronger and more efficient. This capacity to connect is fostered by guidance proteins: dendrites and axons are attracted to or repelled by these proteins and grow towards or away from increasing concentrations of them.
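Hebb's proposal is often summarised in neural-network texts as a simple rule: the strength of a connection grows in proportion to the joint activity of the two neurons it links. The sketch below is a minimal illustration of that rule in Python, not a model of real neurons. The function name `hebbian_update`, the learning rate of 0.1 and the activity values are all assumptions chosen for the example.

```python
def hebbian_update(weight, pre, post, rate=0.1):
    """Return the connection weight after one Hebbian step.

    weight: current connection strength (hypothetical scalar)
    pre, post: activity of the sending and the receiving neuron
    rate: learning rate, an illustrative value only
    """
    return weight + rate * pre * post


weight = 0.0
# The two neurons are active together on five successive occasions,
# so the connection is strengthened each time.
for _ in range(5):
    weight = hebbian_update(weight, pre=1.0, post=1.0)

# If the sending neuron is silent, the connection is left unchanged.
idle = hebbian_update(weight, pre=0.0, post=1.0)
```

Repeated joint activity steadily raises `weight`, which is the "practice makes perfect" effect in miniature. The plain rule can only strengthen a connection, so practical systems add some way of weakening connections as well.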

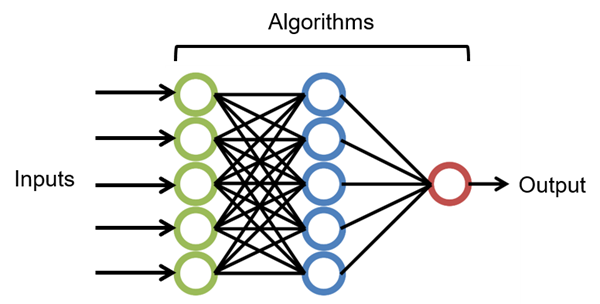

The brain’s network of neurons provided a model for the later development of AI systems built from neural networks: layers of simple, interconnected algorithms, often called nodes. Because each layer takes the previous layer’s output as its input, changing the output of the algorithms in one layer can alter the output of the algorithms in the subsequent layer. This permits the use of neural networks to analyze complex data sets with numerous variables.
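The way one layer feeds the next can be shown with a very small network. The following Python sketch assumes a hypothetical network of two inputs, two hidden nodes and one output node, with made-up weights and a sigmoid squashing function. None of these choices comes from a particular AI system; they are there only to show that a change in the first layer carries through to the second.

```python
import math


def layer(inputs, weights, biases):
    """Compute one layer: each node takes a weighted sum of all inputs,
    adds a bias and squashes the result into the range 0 to 1."""
    outputs = []
    for node_weights, bias in zip(weights, biases):
        total = sum(w * x for w, x in zip(node_weights, inputs)) + bias
        outputs.append(1.0 / (1.0 + math.exp(-total)))
    return outputs


def forward(inputs, layers):
    """Pass the inputs through each layer in turn."""
    activations = inputs
    for weights, biases in layers:
        activations = layer(activations, weights, biases)
    return activations


# Hypothetical network: 2 inputs -> 2 hidden nodes -> 1 output node.
hidden = ([[0.5, -0.4], [0.3, 0.8]], [0.0, 0.1])
output = ([[1.2, -0.7]], [0.05])

baseline = forward([1.0, 0.5], [hidden, output])

# Change a single weight in the first layer only.
altered_hidden = ([[2.0, -0.4], [0.3, 0.8]], [0.0, 0.1])
altered = forward([1.0, 0.5], [altered_hidden, output])
```

Comparing `baseline` with `altered` shows that the final output moves even though the second layer itself was not touched. Training a network amounts to making many such adjustments until the final output is useful.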

Hebb’s work inspired a methodology for training neural networks by feeding them particular data sets. A neural network with more than three layers, counting the input and output layers, is generally considered capable of deep learning: this means that it is less dependent on human intervention and is capable of identifying patterns in unlabelled data sets. Deep learning is used to power many of the AI systems encountered in daily life, such as predictive search engines, translation tools, and voice and image recognition.

This type of AI, known as ‘artificial narrow intelligence’ (ANI), can be more efficient than humans at complex tasks, especially in processing and analyzing very large data sets. But ANI is limited to a specific task, whereas the brain is a general processor with no such limitations. Humans can ably handle previously unknown situations and tasks, even when they differ greatly from anything encountered before. This is due to the brain’s ability to weaken existing connections and to form new ones, using the same guidance proteins.

AI with this type of general processing capability is known as ‘artificial general intelligence’ (AGI) and is one of the frontiers in AI research. There is a debate as to whether AGI is even feasible. But given the brain’s ability to adapt to novel situations, developing AGI may be just another challenge that humans are more than capable of overcoming, perhaps aided by a little inspiration from the brain’s own rewiring mechanisms. This way lies our survival.

Dr Jonathan Chong is an intellectual property, cybersecurity and privacy lawyer in Montréal. He has degrees in chemistry and biochemistry. His research career included hydrogen storage materials, bio-based materials and renewable replacements for feedstocks derived from fossil fuels.

—Watercolour by Henrietta Scott—

© Norton Rose Fulbright LLP 2025